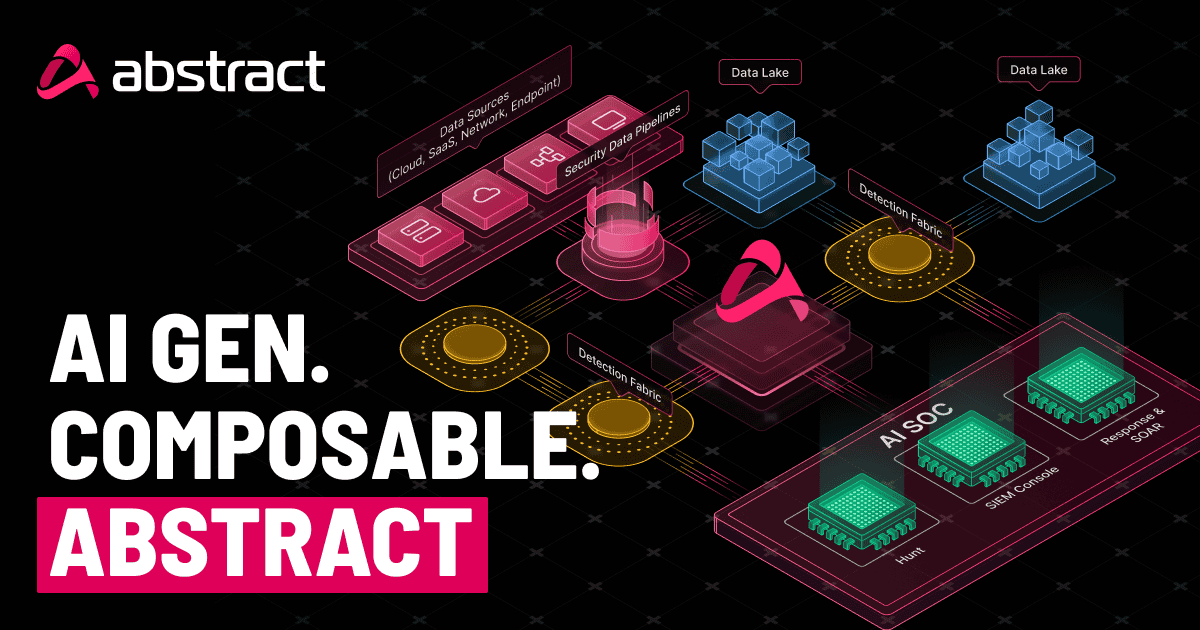

Integrating Security Data Pipelines with Detection

To AI, or not to AI: that is the question I feel is plaguing most “thought” leadership pieces we are asked to write from time to time. Do I use it to save myself the bandwidth? Do I make the time? Or do I act like Sauron, and into this AI pour my half-formed thoughts, my impostor syndrome, and my will to ship this blog post by Friday?

Oh, sorry, let me introduce myself! I am Brandon Borodach, a Field CTO here at Abstract Security. I have been in the SIEM space for what feels like a lifetime and have worked on almost all of them at some point in my career. I recently finished a five-year stint at Sumo Logic, where I helped customers migrate from their current SIEM (Exabeam, Splunk, CrowdStrike, etc.) to Sumo Logic. It was a fun time, but I started to notice a recurring pattern in these SIEM migrations: while migrating the data was obviously important (and one of the things we made money on), one question came up consistently: “How are you going to migrate my detections?”

It is a valid question. The detection team just spent months, sometimes longer, on tuning, change control, and waiting for data to be onboarded, and then here comes Brandon saying, “I know you just built it and it works great, but your leadership wants you to look at my solution to save money” 🥴.

I took some time off from my gig at Sumo and started to do some research on the “next” thing in the security space that I could use to solve real problems that people struggled with. I saw two big opportunities that I thought were relevant enough to put time into research: Federated SIEMs and Security Data Pipeline Platforms.

I decided on the pipeline, and I hope to explain why in the rest of this post! In recent news, there was a wave of acquisitions that proves pipelines clearly aren't optional anymore. CrowdStrike bought Onum. SentinelOne acquired Observo AI. Palo Alto Networks picked up Chronosphere. All within months of each other. The question now is whether you treat your pipeline as plumbing or as a security engine.

I think most organizations are still treating it as plumbing, where data moves from point A to point B. Maybe some filtering happens along the way to help reduce cost, but the detection lives somewhere else, usually in a SIEM buckling under that volume and cost. Some organizations have even more complexity: their scale is so large that they have TBs or PBs of data split across multiple data lake platforms, which has created another market, Federated SIEM. The Federated model, which I will touch on later in this post, is interesting, but I personally feel (and many colleagues in the industry tell me they feel similarly) that it’s not the best model for solving the widest range of SIEM challenges.

The real opportunity is combining security data pipelines with built-in threat detection inline. Not as separate layers. Together. This integrated approach improves signal-to-noise ratio, cuts costs, and accelerates response. More importantly, it means customers don't have to migrate their detections again every time leadership decides to switch SIEMs because of cost. Recently, at the end of a POV I had just completed, the prospect said, “the further we shift left with Abstract on our tech stack diagram, the more money we save.” In other words: lower cost and more functionality.

In this post, I'll explain why pairing your pipeline with real-time detections beats the traditional bifurcated model that most pipeline solutions follow today; how Abstract enriches telemetry with context that helps analysts and levels the entire team up; and how we can help solve the federated SIEM problem and leverage data lakes without the wrangling headache.

Why Detection Belongs in the Pipeline

Most pipeline solutions today treat detection as someone else's problem. Data comes in, gets filtered, maybe normalized, and then ships off to a SIEM where the real work happens. That made sense when pipelines were just plumbing. But it creates a fundamental issue: you're still paying the SIEM to do the heavy lifting, and you're still stuck migrating detections every time the decision to switch platforms is made. To be clear, there are great reasons to switch platforms. But I’ll admit, during my practitioner days I was always a little gremlin when I was asked to look at migrating detections from one platform to another.

Here's what I learned from years of SIEM migrations on the vendor side (ArcSight -> Splunk, Splunk -> Sumo, and any SIEM -> Sumo): while not a walk in the park, the data move is the easy part. The hard part is the detections. Detection engineers spend months tuning rules, working through change control, or writing custom sigma.py backends because they like pain (shoutout to Joe Blow in the detection engineering community) or, worse, waiting on data to be onboarded. I hate to say it, but even when the data is onboarded and seemingly ready to use, either it is parsed incorrectly or important fields were dropped to “save” cost. Then someone like me shows up saying "great news, your leadership talked to us at Black Hat and we're looking to move you to our platform to save money," and suddenly all that work is at risk. It's demoralizing for the team and expensive for the business.

When you build detection directly into the pipeline, you can break this cycle! Your detection logic lives upstream of any SIEM (Shift Left!).

... Sorry, quick tangent, I remember when Shift Left came out for the ‘DevSecOps’ world and I was like “ok, when will this happen for SecOps?” and now I am at the company pioneering it!

Ok, back to the point. Do you want to swap out Splunk for Sentinel? Chronicle for Sumo? Your detections no longer care. Add a new data lake for long-term storage? Still works. The pipeline becomes the source of truth for detection, not the downstream tool that happens to be in favor this quarter.

I would even go as far as to say that there's a performance argument too. When detection runs in the data stream, you catch threats as they happen, not hours later after indexing. Specifically, I want to call out a pain point I experienced while managing detection content for large traditional SIEMs (10+ TB a day): skipped searches.

If you are unfamiliar, one of the dirty secrets of legacy SIEMs is what happens when the system gets overloaded: scheduled searches just don't run. Your detection rule that's supposed to fire every five minutes? If the platform is under strain from ingestion volume or competing queries, it quietly skips that execution window. No alert. No notification that your coverage had a gap. Just silence. In fact, somewhere in my pile of USBs, I have a dashboard saved that was written just to micro-manage when to save my searches and on what cron minute so that I could minimize this impact.

This is a real problem for security teams who assume their detections are running continuously. You built the rule. You tuned it. You got it through change control. But if the infrastructure can't keep up, that rule becomes decoration. Threats that occur during skipped windows slip through undetected, and you may never know it happened until you dig through platform health logs that most analysts never look at.
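To make the coverage gap concrete, here is a minimal Python sketch that takes the times a scheduled search actually ran and reports the windows it silently skipped. It assumes you can export run timestamps from your platform's scheduler or health logs; the function name, the input format, and the five-minute interval are illustrative assumptions, not any vendor's API.

```python
from typing import Iterable, List, Tuple


def find_skipped_windows(
    run_times: Iterable[float], interval_s: int = 300
) -> List[Tuple[float, float, int]]:
    """Return (last_run, next_run, missed_runs) for every gap in the schedule.

    run_times: epoch seconds at which the search actually executed
    (hypothetical export from scheduler/health logs).
    interval_s: the intended schedule, e.g. 300 for "every five minutes".
    """
    runs = sorted(run_times)
    gaps = []
    for prev, cur in zip(runs, runs[1:]):
        delta = cur - prev
        # Round the gap to the nearest number of intervals, then subtract
        # the one run that did happen at the end of the gap.
        missed = int((delta + interval_s / 2) // interval_s) - 1
        if missed >= 1:
            gaps.append((prev, cur, missed))
    return gaps


# The rule should run every 300s, but two executions never happened.
observed_runs = [0, 300, 600, 1500, 1800]
for start, end, missed in find_skipped_windows(observed_runs):
    print(f"no coverage between t={start} and t={end}: {missed} skipped run(s)")
```

Anything that happened between those two timestamps was never evaluated by the rule, which is the blind spot described above.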

An example use case: say you have 100,000 raw authentication logs. Instead of forwarding all of them and letting a SIEM figure out which ones indicate a brute force attempt, or building a few building-block rules that then “tie” these things together (potentially causing more skipped searches or introducing more complexity), a tool with an integrated pipeline, like Abstract, identifies the pattern in flight and sends one summarized alert directly to your SIEM, SOAR, or data lake for more processing. Not a flood of raw events. That "shift left" approach surfaces alerts faster and reduces the noise that reaches your SIEM.
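As a rough illustration of the brute-force example, the Python sketch below counts failed logins per source IP and user in a sliding window as events stream through, and emits a single summarized alert once a threshold is crossed. This is not Abstract's implementation: the `AuthEvent` shape, the threshold of 5, and the 60-second window are all assumptions made for the example, and it expects events in time order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class AuthEvent:  # hypothetical event shape for this sketch
    ts: float      # epoch seconds
    user: str
    src_ip: str
    success: bool


def detect_brute_force(
    events: Iterable[AuthEvent], threshold: int = 5, window_s: float = 60.0
) -> Iterator[dict]:
    """Yield one summarized alert per (src_ip, user) burst of failed logins."""
    failures = defaultdict(deque)  # (src_ip, user) -> recent failure timestamps
    alerted = set()                # keys already alerted on, to avoid repeats
    for ev in events:
        key = (ev.src_ip, ev.user)
        if ev.success:
            # A successful login resets the counter for that pair.
            failures.pop(key, None)
            alerted.discard(key)
            continue
        recent = failures[key]
        recent.append(ev.ts)
        # Drop failures that have aged out of the sliding window.
        while recent and ev.ts - recent[0] > window_s:
            recent.popleft()
        if len(recent) >= threshold and key not in alerted:
            alerted.add(key)
            yield {
                "alert": "possible_brute_force",
                "src_ip": ev.src_ip,
                "user": ev.user,
                "failure_count": len(recent),
                "first_seen": recent[0],
                "last_seen": ev.ts,
            }


# 100 failed logins from one IP produce a single alert downstream.
stream = [AuthEvent(ts=i * 0.5, user="alice", src_ip="203.0.113.7", success=False)
          for i in range(100)]
alerts = list(detect_brute_force(stream))
print(len(alerts), alerts[0]["failure_count"])
```

The downstream SIEM, SOAR, or data lake receives the one alert dictionary rather than the hundred raw events that produced it.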

I think the architecture is simplified as well. When things are bifurcated, it creates complexity: a log pipeline feeding a SIEM with its own query language, which in turn feeds a SOAR for response. There are handoffs everywhere and potential blind spots between tools. With Abstract, we collapse these layers, which simplifies things for security teams and means less maintenance split between tools. More importantly, I think, your detection investment stays with you regardless of where the data ultimately lands.

Enrichment That Actually Helps: Leveling Up the Entire Team

By about my third or fourth day at Abstract, I noticed prospects consistently bringing up the pain points of enrichment, context, or automation to level up the team. On their own, raw logs are great, and some vendors are better than others at providing context about the event. But let’s face it, a lot of the time we are taking that one log and then pivoting to two or three other tools to get the “full story” or context.

A great example is firewall logs. They tell us what happened with the traffic or potential threat (depending on vendor), but how do you correlate that firewall traffic with the user? Or the host? Sometimes all you get is a 10.5.1.2. OK, who does that belong to? So the analyst needs to take that IP and do a wildcard search to figure out where else it has been seen and try to get a clue about where to pivot. Did I see it in my Windows logs? What about my EDR? If they are lucky, they can find this information in 2-3 minutes, but it’s a manual step every single time. That is partially why SOAR tools were created: to automate those steps into repeatable playbooks that trigger when the alert fires. It makes a lot of sense, and I think it is a unique approach, but again it is AFTER the fact. And what if some of the data, because it is voluminous, lives in cheaper storage while the rest lives in the SIEM? More coding.

In a recent win with a customer, we solved this use case in a unique way. They had a telemetry source (BloxOne) that had a consistent mapping of username, hostname, external_ip and internal_ip. BloxOne logs after each DNS request, so this identity info was up to date to the second. Through our pipeline approach, we were able to enrich all the customer's firewall and flow logs with this telemetry at the time of the event, not at search time. Why does that matter? Imagine the analyst gets to an alert after a DHCP lease has already changed. Without time-accurate enrichment, attribution becomes guesswork. In this model, the identity data is captured at ingest, stored immutably, and preserved for historical analysis and forensics. If something goes wrong and they need to reconstruct what happened, the attribution is already locked in.
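The DHCP scenario is easier to see in code. The Python sketch below is a simplified illustration, not the customer's actual pipeline: it keeps the most recent identity seen per internal IP from DNS events and stamps each firewall event with whatever identity was current when that event passed through. The `type`, `ts`, and `src_ip` keys and the `identity_seen_at` field are assumptions for the example; `username`, `hostname`, and `internal_ip` follow the mapping described above.

```python
from typing import Dict, Iterable, Iterator


def enrich_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Enrich firewall events with identity learned from DNS events.

    Events must arrive in time order. DNS events update the identity
    table; firewall events are emitted with the identity known at that
    moment, so later lease changes cannot rewrite the attribution.
    """
    identity_by_ip: Dict[str, dict] = {}
    unknown = {"username": None, "hostname": None, "identity_seen_at": None}
    for ev in events:
        if ev["type"] == "dns":
            identity_by_ip[ev["internal_ip"]] = {
                "username": ev["username"],
                "hostname": ev["hostname"],
                "identity_seen_at": ev["ts"],
            }
        elif ev["type"] == "firewall":
            ident = identity_by_ip.get(ev["src_ip"], unknown)
            yield {**ev, **ident}


# 10.5.1.2 belongs to alice, then the DHCP lease moves to bob.
events = [
    {"type": "dns", "ts": 100, "internal_ip": "10.5.1.2",
     "username": "alice", "hostname": "alice-laptop"},
    {"type": "firewall", "ts": 105, "src_ip": "10.5.1.2", "action": "deny"},
    {"type": "dns", "ts": 200, "internal_ip": "10.5.1.2",
     "username": "bob", "hostname": "bob-laptop"},
    {"type": "firewall", "ts": 205, "src_ip": "10.5.1.2", "action": "allow"},
]
for enriched in enrich_stream(events):
    print(enriched["ts"], enriched["username"], enriched["hostname"])
```

A lookup at search time would attribute both firewall events to whoever holds the IP when the analyst runs the query; enriching in flight keeps the first event tied to alice.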

What about threat intelligence? Let’s say you are using Abstract and you just completed the use case above and now you have users and hostnames in your firewall logs. Is that IP known-bad? Is that user a domain admin or an intern? Is that asset a production server or a test box someone spun up and forgot about? Without that context, your analyst is tab-switching between five different tools trying to piece together what they're looking at. With Abstract also performing threat intel enrichment inline, by the time your analyst sees the alert, it already says "this IP is associated with APT29" or "this domain was flagged by your TI vendor yesterday." No pivot required.

There are other use cases our customers have leveraged this enrichment for as well, such as CMDB enrichment. This is the stuff that separates a junior analyst staring at an alert from a senior analyst who immediately knows the blast radius. When you can enrich an event with "this is a finance server" or "this user is a member of Domain Admins" or "this asset is in scope for PCI," you are providing the analyst with enough data to make an immediate judgment call or at least better enable them to execute certain playbooks. You're telling the analyst why this matters.
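A minimal Python sketch of that kind of context enrichment might look like the following. The three lookup tables stand in for a threat intel feed, a CMDB export, and a directory group listing; every entry, field name, and the `add_context` helper are made up for illustration and do not reflect Abstract's data model.

```python
# Hypothetical lookup tables. In practice these would be refreshed from a
# TI vendor feed, a CMDB export, and a directory service.
THREAT_INTEL = {
    "203.0.113.50": {"ti_label": "APT29", "ti_source": "example-ti-vendor"},
}
CMDB = {
    "fin-db-01": {"business_unit": "finance", "environment": "production",
                  "pci_in_scope": True},
}
DIRECTORY = {
    "alice": {"groups": ["Domain Admins"]},
}


def add_context(event: dict) -> dict:
    """Return a copy of the event with TI, asset, and user context attached.

    Missing lookups are recorded as None so the analyst can tell
    "no match" apart from "never checked".
    """
    enriched = dict(event)
    enriched["threat_intel"] = THREAT_INTEL.get(event.get("dest_ip"))
    enriched["asset"] = CMDB.get(event.get("hostname"))
    enriched["user_context"] = DIRECTORY.get(event.get("username"))
    return enriched


alert = add_context({
    "username": "alice",
    "hostname": "fin-db-01",
    "dest_ip": "203.0.113.50",
})
print(alert["threat_intel"], alert["asset"], alert["user_context"])
```

With those three fields on the alert, a Tier 1 analyst can see at a glance that a Domain Admin on a PCI-scoped finance server talked to a flagged IP, without opening another tool.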

I've worked with enough SOC teams to know what happens without this, and this problem is compounded with MSSPs. Tier 1 analysts escalate everything because they don't have the context to make a call. Tier 2 spends half their day answering questions that could have been answered by the data itself. Tier 3 is frustrated because they're constantly pulled into triage instead of doing actual threat hunting. It's inefficient and it burns people out.

When you enrich at the pipeline level, you level up the entire team. Your Tier 1 analysts can make smarter decisions because the alert tells them what they need to know. Your senior folks spend less time answering, "is this important?" and more time on actual investigation or threat hunting.

And for the leadership folks, if you are still here, the part that matters is that enrichment travels with the data. Whether it lands in Splunk, Sentinel, Snowflake, or all three, the context is already there. You're not paying each downstream tool to do the same enrichment. You're not maintaining separate lookup tables in five different places. You do it once, in the pipeline, and every consumer downstream benefits.

Solving the Federated SIEM Problem

Earlier I mentioned that I was researching two areas before joining Abstract: pipelines and federated SIEMs. These aren't unrelated problems. In fact, I'd argue the pipeline is how you solve federated search without the pain.

Here's the reality for large enterprises: you don't have one SIEM. You have three, plus a data lake on the side like Snowflake or Databricks because the analytics team uses it as well. Splunk over here because the security team likes it. Sentinel over there because Microsoft gave you a deal. Chronicle in another business unit because someone's buddy worked at Google. Maybe Elastic in the OT environment because it was "free." Each platform has its own schema, its own field names, its own way of representing the same data. A source IP might be src_ip in one, source.address in another, and SourceIP in a third.

The promise of federated search tools is that you can “leave the data where it is and run your detections,” unifying those detections in a single platform. I think this sounds great in the demo and in theory, but having seen firsthand how it is operationalized, it is a NIGHTMARE. The reason is normalization. Or rather, the complete lack of industry standardization around it.

We've been arguing about this for 20 years. CEF. LEEF. CIM. OCSF. ECS. Every vendor pushes their own schema because it creates lock-in and therefore stickiness. Your detection content, your dashboards, your reports all depend on field names. Change the schema, break the content. It's a trap!

So how do the federated tools try to solve this? A few approaches, none of them great. Some are betting on AI to auto-translate queries at search time. Take your Splunk SPL, magically convert it to KQL for Sentinel, hope it works. Insert the whole sigma.py backend reference again… Sometimes your query returns garbage because the AI didn't understand the nuance of what you were looking for. And you're paying for that compute every single time you search, while also paying a vendor to iterate quickly and solve this with AI.

Others use macros or field aliasing at search time. Define a mapping layer that translates field names on the fly. This works until it doesn't. The maintenance burden is real. Every new data source means updating your mapping tables. Every schema change in a downstream platform means debugging why your searches broke.

The worst part? Storage is a commodity now. S3 is cheap. Snowflake storage is cheap. Databricks storage is cheap. These platforms make their money on compute. Every time you run a query that must transform, translate, or wrangle data into a usable format, you're paying for it. That search-time normalization isn't free. It's a tax you pay every single time someone runs a query.

Here's the Abstract approach: normalize once, upstream, in the pipeline. Before data ever hits your data lake or SIEM, it's already in a consistent schema. You pick the schema. OCSF? Sure. ECS? Fine. Your own custom format because you've been using it for ten years and don't want to change? We can do that too.

The key is that normalization happens at ingestion time, not search time. The compute cost is paid once. The data lands in your lake already formatted, already enriched, already ready for analysis. When your federated search tool queries across Snowflake and Sentinel and wherever else, it's querying consistent data. Field names match. Schemas align. The searches actually work!
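Here is a small Python sketch of the "normalize once, upstream" idea, using the three source-IP spellings mentioned earlier. The canonical name `source_ip`, the `FIELD_ALIASES` table, and the helper functions are assumptions for illustration; a real pipeline would map to whichever schema you chose (OCSF, ECS, or your own) and cover far more fields.

```python
from typing import Any, Dict, List, Optional

# Hypothetical mapping: canonical field name -> names seen in the wild.
FIELD_ALIASES: Dict[str, List[str]] = {
    "source_ip": ["src_ip", "source.address", "SourceIP"],
}


def get_path(event: dict, path: str) -> Optional[Any]:
    """Fetch a field by flat key ("source.address") or nested path."""
    if path in event:
        return event[path]
    current: Any = event
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def normalize(event: dict, aliases: Dict[str, List[str]] = FIELD_ALIASES) -> dict:
    """Return the mapped fields of an event under their canonical names.

    This sketch emits only mapped fields; unmapped fields are dropped.
    """
    out = {}
    for canonical, candidates in aliases.items():
        for name in candidates:
            value = get_path(event, name)
            if value is not None:
                out[canonical] = value
                break
    return out


# The same source IP as three different platforms might represent it.
for raw in (
    {"src_ip": "10.5.1.2"},
    {"source": {"address": "10.5.1.2"}},
    {"SourceIP": "10.5.1.2"},
):
    print(normalize(raw))
```

Because the mapping runs once at ingestion, every downstream query can filter on the single canonical field instead of translating names at search time. Changing the target schema then amounts to changing the canonical names in the mapping.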

And here's what I think is the real unlock: because we normalize in the pipeline, you can change your mind later without breaking everything downstream. Want to switch from CIM to OCSF? Update the pipeline config. Your historical data stays as-is, your new data comes in with the new schema, and you can run a backfill job if you need consistency. Your detections don't break. Your dashboards don't break. Your analysts don't spend a month fixing field references.

This is the unsexy infrastructure work that makes federated search actually viable. It's not about building a smarter query translator. It's about ensuring the data is clean and consistent before it ever needs to be queried.

Wrapping up

If you’ve made it this far, thanks for sticking with me and dealing with my jokes in between. I know it was a lot, but I genuinely believe this is the direction the industry is heading and I wanted to lay it out.

The summary is simple: pipelines aren’t plumbing anymore. They’re the control plane for your security data. If you just see them as a cost optimization layer, then you’re leaving value on the table.

When you integrate detection into the pipeline, you stop the endless cycle of migrating rules every time leadership picks a new SIEM. When you enrich at ingestion, you level up your entire analyst team without hiring more people. When you normalize upstream, federated search actually works and you stop paying compute taxes every time someone runs a query.

This isn't theoretical. We're doing this with customers today. The prospect who told me "the further we shift left on our tech stack diagram, the more money we save" wasn't speaking hypothetically. They saw it with their own data and got to implement it themselves.

I've spent my career helping organizations migrate SIEMs, and I've seen the pain firsthand. The detection engineers who spent months building content only to watch it get thrown away or re-created. The analysts drowning in alerts without context or stuck building Python playbooks. The security leaders stuck choosing between visibility and budget. We’ve been migrating SIEMs the same way for years. Like stormtroopers, we keep missing the real target.

What's Next?

If any of this resonated, I'd love to chat. Whether you're drowning in SIEM costs, struggling with multi-platform complexity, or just want to see what "shift left" looks like in practice, reach out.

You can find me on LinkedIn or shoot me an email at brandon@abstractsecurity.com. I'm always happy to talk shop, even if Abstract isn't the right fit for you. Sometimes you just need someone who's been through it to help think through the problem.

And if you're curious about Abstract specifically, we're happy to do a no-pressure demo or even a POC against your own data. Let's see what's possible.

Thanks for reading. Now go check on your scheduled searches.

