What Is a Security Data Pipeline Platform (SDPP) and Why Do Security Teams Need One?

It’s true that cybersecurity needs more acronyms like we need more CVEs, but this one is worth explaining (even if no one really uses it and everyone continues to just say “pipeline” instead).

Why Security Teams Needed a New Kind of Pipeline

Yes, it’s a cliché to start a cybersecurity blog with “things are different than they used to be,” but in this case that is exactly what happened. There’s far more security telemetry than there was in the beforetimes. Cloud adoption, zero-trust architectures, and more sophisticated threat detection requirements have pushed data volumes far beyond what traditional SIEM-centric architectures were designed to handle. Many organizations now generate terabytes of logs daily from endpoints, cloud infrastructure, identity systems, and network devices.

Generic data pipelines can move data efficiently, but they weren't built with security outcomes in mind. They solve for movement and transformation, not for the challenges specific to security teams: detection latency, cost control, and the handling of operationally critical telemetry.

Security Data Pipeline Platforms emerged to address the scale, cost, and control problems that are unique to security data. Unlike general-purpose ETL tools or log collectors, SDPPs are built around the realities of modern security operations. They increasingly sit at the center of security architectures, acting as the control plane between data sources and the tools that consume them.

What Is a Security Data Pipeline Platform (SDPP)?

A Security Data Pipeline Platform (SDPP) is a purpose-built platform for ingesting, transforming, enriching, and routing security telemetry at scale. It handles logs, events, alerts, and identity and cloud telemetry with the performance, reliability, and security-specific capabilities that generic pipelines lack.

The emphasis here is on platform, not tool. An SDPP isn't just a connector or a forwarder; it's a comprehensive system designed to give security teams control over their telemetry before it reaches SIEMs, data lakes, or detection systems.

Why Security Data Is Different

Security telemetry is high-volume, bursty, and operationally critical. A single security event can trigger thousands of related logs within seconds. Business analytics data can tolerate some (and sometimes quite a bit of) delay or sampling, but security data demands different treatment. Latency and data loss in security pipelines directly affect detection and response. If a critical alert is delayed by even a few minutes, attackers gain an advantage. If logs are dropped during volume spikes, blind spots emerge in your security posture.

The cost behavior of security data also differs from that of general analytics workloads. Security teams often need to retain data for compliance reasons and may query it only rarely, yet it must be instantly available when they investigate an incident. This creates unique economic pressures that generic data platforms don't account for.

Generic pipelines struggle in security contexts because they lack built-in understanding of security data formats, threat intelligence enrichment, detection logic, and the real-time demands of security operations.

Why SIEM-Centric Architectures Started to Break Down

Traditional SIEMs were never designed to be the first place data gets shaped, the only detection runtime, or the controller of all telemetry routing. Yet over time, they became exactly that for many organizations. This centralization created several pain points. Ingestion-based pricing models meant that every new data source added cost, even if teams only needed that data occasionally. Detection latency increased because events had to be indexed before they could be queried or trigger alerts. Proprietary rule languages and data formats created lock-in, making it difficult to move detections or data to other tools.

The outcome was predictable. Cost became the proxy for risk. Teams trimmed retention, sampled noisy sources, and postponed new telemetry to stay within budget. Those choices controlled spend, but they also eroded visibility and quietly increased the likelihood of missed or delayed detections.

Core Capabilities of a Security Data Pipeline Platform

High-Scale Security Telemetry Ingest

SDPPs are built to handle sustained throughput of hundreds of gigabytes to terabytes per day, with the ability to absorb sudden spikes without dropping data. They support diverse protocols and formats, from syslog and APIs to cloud-native streaming interfaces.
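To make “absorb sudden spikes without dropping data” concrete, here is a minimal Python sketch of one common technique: a bounded in-memory buffer that spills overflow to local disk instead of discarding it. `SpillBuffer` and its parameters are hypothetical names for illustration, not any product's API; a production pipeline would more likely use a durable queue with backpressure and acknowledgements.

```python
import json
import tempfile
from collections import deque
from typing import Iterator


class SpillBuffer:
    """Bounded in-memory buffer that spills overflow to local disk instead of dropping events."""

    def __init__(self, memory_capacity: int) -> None:
        self.memory: deque = deque()
        self.memory_capacity = memory_capacity
        # Overflow is appended to a temporary file as JSON lines.
        self.spill = tempfile.TemporaryFile(mode="w+", encoding="utf-8")
        self.spilled = 0

    def push(self, event: dict) -> None:
        # Once anything has spilled, keep spilling so first-in, first-out order holds.
        if self.spilled == 0 and len(self.memory) < self.memory_capacity:
            self.memory.append(event)
        else:
            self.spill.write(json.dumps(event) + "\n")
            self.spilled += 1

    def drain(self) -> Iterator[dict]:
        # The oldest events are in memory; newer overflow is on disk.
        while self.memory:
            yield self.memory.popleft()
        if self.spilled:
            self.spill.seek(0)
            for line in self.spill:
                yield json.loads(line)
            # Empty the spill file so the next burst starts fresh.
            self.spill.seek(0)
            self.spill.truncate()
            self.spilled = 0
```

The design choice worth noting: once any event has gone to disk, later events follow it there, so draining still returns events in arrival order.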

Security-Aware Parsing and Normalization

Rather than treating all data as generic text or JSON, SDPPs understand security-specific formats such as CEF (Common Event Format), LEEF (Log Event Extended Format), and vendor-specific log structures. They normalize fields to common schemas, making it easier to write detections and correlations that work across multiple data sources.
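As an illustration of security-aware parsing, the sketch below splits a CEF record into its seven pipe-delimited header fields and its `key=value` extension, then maps a few standard extension keys (`src`, `dst`, `dpt`, and so on) onto common field names. It's deliberately simplified: escape handling is naive, and the target names (`source.ip`, `destination.port`, etc.) are assumptions chosen for readability rather than any specific vendor's schema.

```python
import re

HEADER_FIELDS = ["cef_version", "vendor", "product", "device_version",
                 "signature_id", "name", "severity"]

# Illustrative mapping from a few standard CEF extension keys to common field names.
CEF_TO_COMMON = {
    "src": "source.ip",
    "dst": "destination.ip",
    "spt": "source.port",
    "dpt": "destination.port",
    "suser": "user.name",
    "act": "event.action",
}


def _unescape(value: str) -> str:
    # Simplified: drop the backslash from any escaped character (\| \= \\).
    return re.sub(r"\\(.)", r"\1", value)


def parse_cef(line: str) -> dict:
    """Split a CEF record into its seven header fields and extension key/value pairs."""
    if not line.startswith("CEF:"):
        raise ValueError("not a CEF record")
    # Split on pipes not preceded by a backslash: 7 header fields, then the extension.
    parts = re.split(r"(?<!\\)\|", line[len("CEF:"):], maxsplit=7)
    if len(parts) < 7:
        raise ValueError("truncated CEF header")
    record = {field: _unescape(value) for field, value in zip(HEADER_FIELDS, parts)}
    extension = parts[7] if len(parts) > 7 else ""
    # A key is a run of word characters followed by '='; its value runs until the next key.
    keys = list(re.finditer(r"(?:^|\s)(\w+)=", extension))
    record["extensions"] = {}
    for i, m in enumerate(keys):
        end = keys[i + 1].start() if i + 1 < len(keys) else len(extension)
        record["extensions"][m.group(1)] = _unescape(extension[m.end():end].strip())
    return record


def normalize(record: dict) -> dict:
    """Map parsed CEF onto common field names; unknown keys are kept under a cef.* prefix."""
    event = {
        "event.provider": record["vendor"],
        "event.name": record["name"],
        "event.severity": record["severity"],
    }
    for key, value in record["extensions"].items():
        event[CEF_TO_COMMON.get(key, f"cef.{key}")] = value
    return event
```

With a mapping like this in place, a detection written against `source.ip` can work whether the event arrived as CEF or from another source whose parser maps to the same names.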

Enrichment at Ingest Time

SDPPs can enrich events as they flow through the pipeline, adding threat intelligence, geolocation data, asset context, or user information. This enrichment happens before data reaches expensive storage or analytics systems, reducing the processing burden downstream.
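Here's a minimal enrichment step, assuming events already use normalized field names like `source.ip` and `destination.ip`. The threat intel, asset, and network-zone tables are hypothetical in-memory dictionaries; a real pipeline would load them from feeds or an asset inventory, and the network-zone lookup here stands in for a proper geolocation database.

```python
import ipaddress

# Hypothetical context tables; in practice these come from TI feeds, a CMDB, or a GeoIP database.
THREAT_INTEL = {"203.0.113.7": "known C2 server"}
ASSETS = {"10.0.0.5": {"hostname": "fin-laptop-12", "owner": "finance"}}
NETWORK_ZONES = {
    ipaddress.ip_network("10.0.0.0/8"): "internal",
    ipaddress.ip_network("203.0.113.0/24"): "external",
}


def zone_for(ip: str) -> str:
    """Return the first configured network zone containing the address."""
    addr = ipaddress.ip_address(ip)
    for network, zone in NETWORK_ZONES.items():
        if addr in network:
            return zone
    return "unknown"


def enrich(event: dict) -> dict:
    """Attach zone, threat intel, and asset context to both ends of a connection."""
    enriched = dict(event)  # leave the input event untouched
    for side in ("source", "destination"):
        ip = event.get(f"{side}.ip")
        if ip is None:
            continue
        enriched[f"{side}.zone"] = zone_for(ip)
        if ip in THREAT_INTEL:
            enriched[f"{side}.threat"] = THREAT_INTEL[ip]
        if ip in ASSETS:
            enriched[f"{side}.asset"] = ASSETS[ip]
    return enriched
```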

Data Masking and Privacy Controls

Sometimes you want your logs to have a little less information while still maintaining security context. SDPPs can identify and mask sensitive information in security telemetry before it reaches storage or analysis systems. This includes redacting personally identifiable information (PII), credit card numbers, API keys, or other confidential data that might show up in logs.
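A simple masking pass might look like the following sketch. The patterns are illustrative assumptions, not a complete PII detector: emails are replaced outright, card-like digit runs are masked only when they pass the Luhn checksum (so order IDs and timestamps survive), and secrets are caught only when they appear as hypothetical `api_key=`, `token=`, or `password=` pairs.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_LIKE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# Hypothetical: secrets that appear as key=value pairs with common key names.
SECRET_KV = re.compile(r"(?i)\b(api[_-]?key|token|password)=([^\s&]+)")


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def _mask_card(match: re.Match) -> str:
    digits = re.sub(r"\D", "", match.group(0))
    # Mask only Luhn-valid runs so order IDs and timestamps are left alone.
    if luhn_valid(digits):
        return "[CARD:****" + digits[-4:] + "]"
    return match.group(0)


def mask(message: str) -> str:
    """Redact secrets, emails, and card numbers while keeping the rest of the log line."""
    message = SECRET_KV.sub(lambda m: m.group(1) + "=[REDACTED]", message)
    message = EMAIL.sub("[EMAIL]", message)
    return CARD_LIKE.sub(_mask_card, message)
```

Keeping the last four card digits and the secret's key name preserves some investigative context while removing the sensitive value itself.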

Intelligent Routing and Fan-Out

Not all security data needs to go to all systems. SDPPs enable intelligent routing based on content, metadata, or business logic. High-fidelity alerts might go directly to a SIEM, while verbose debug logs route to cheaper long-term storage. The same event can be sent to multiple destinations simultaneously when needed.
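Content-based routing with fan-out can be sketched as an ordered list of rules, where every matching rule contributes destinations. The destination names and field names below are hypothetical; the point is that one event can land in several places, and anything unmatched falls through to a cheap default rather than being lost.

```python
from typing import Callable, List, Tuple

Rule = Tuple[Callable[[dict], bool], List[str]]

# Hypothetical rules: (predicate over a normalized event, destinations to fan out to).
ROUTES: List[Rule] = [
    (lambda e: e.get("event.kind") == "alert", ["siem", "soar"]),
    (lambda e: e.get("event.category") == "authentication", ["siem", "data_lake"]),
    (lambda e: e.get("log.level") == "debug", ["cold_storage"]),
]
DEFAULT_DESTINATIONS = ["data_lake"]


def route(event: dict) -> List[str]:
    """Collect destinations from every matching rule, de-duplicated, in rule order."""
    destinations: List[str] = []
    for predicate, targets in ROUTES:
        if predicate(event):
            for target in targets:
                if target not in destinations:
                    destinations.append(target)
    # Unmatched events still land somewhere cheap instead of being dropped.
    return destinations or list(DEFAULT_DESTINATIONS)
```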

Cost and Volume Control

SDPPs provide filtering, sampling, and transformation capabilities that help teams control data volumes and associated costs. Teams can drop noisy fields, deduplicate events, or apply sampling rules to high-volume sources while preserving critical security signals.
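The sketch below combines three of these levers: dropping noisy fields, suppressing exact duplicates inside a time window, and deterministic sampling (hashing a stable event ID so the same event always gets the same keep-or-drop decision). The field names, the severity threshold of 7, and `VolumeReducer` itself are assumptions for illustration. High-severity events bypass deduplication and sampling so critical signals are never thinned out.

```python
import hashlib
from typing import Optional

# Hypothetical verbose fields that rarely help detection but cost storage.
NOISY_FIELDS = {"raw_headers", "debug_context"}


def keep_sampled(event: dict, rate: float) -> bool:
    """Deterministic sampling: keep the event if its ID hash falls below rate * 2**64."""
    digest = hashlib.sha256(str(event.get("id")).encode()).digest()
    return int.from_bytes(digest[:8], "big") < rate * 2**64


class VolumeReducer:
    def __init__(self, dedup_window_s: float, sample_rate: float) -> None:
        self.dedup_window_s = dedup_window_s
        self.sample_rate = sample_rate
        self.last_forwarded: dict = {}  # (source, message) -> time first forwarded in window

    def process(self, event: dict, now: float) -> Optional[dict]:
        slim = {k: v for k, v in event.items() if k not in NOISY_FIELDS}
        # High-severity events skip dedup and sampling entirely.
        if event.get("severity", 0) >= 7:
            return slim
        signature = (event.get("source"), event.get("message"))
        last = self.last_forwarded.get(signature)
        if last is not None and now - last < self.dedup_window_s:
            return None  # duplicate within the window
        self.last_forwarded[signature] = now
        if not keep_sampled(event, self.sample_rate):
            return None
        return slim
```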

SDPP vs. Generic Pipelines and ETL Tools

Generic ETL tools were designed for batch processing and analytics workloads. While many have added streaming capabilities, they still lack security-specific context and struggle to meet the real-time requirements that security operations demand.

The fundamental difference is batch versus streaming. Security detections can't wait for hourly batch jobs: an authentication anomaly or malware callback needs to trigger an alert within seconds, not after the next scheduled transformation run.

How SDPPs Fit into a Modern Security Architecture

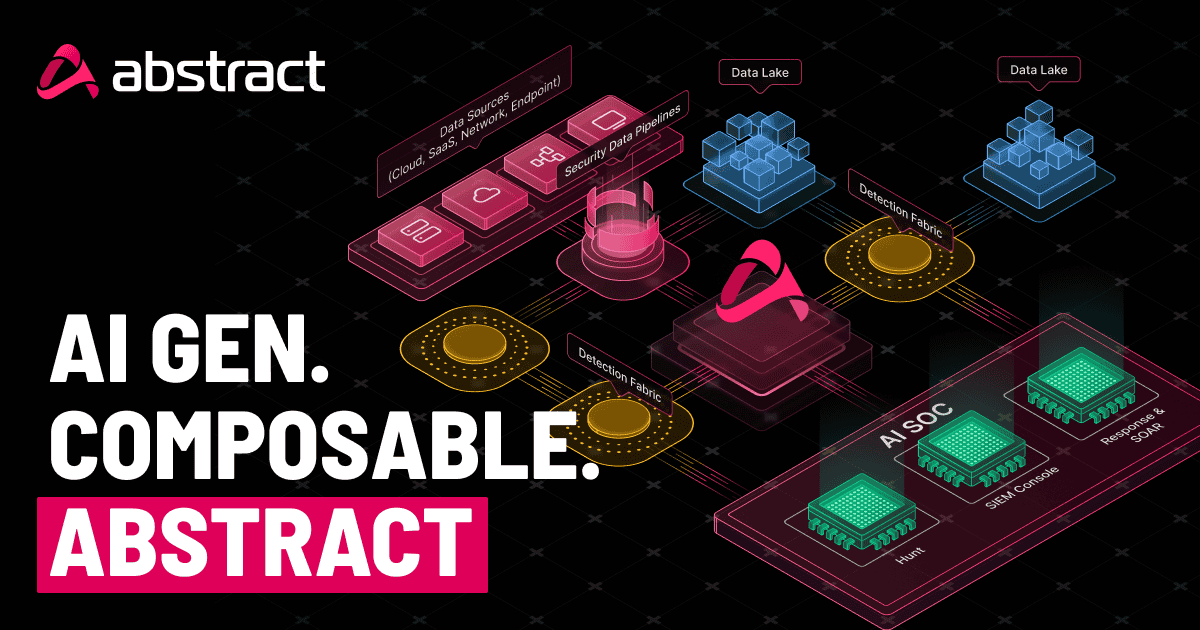

In a modern security architecture, the SDPP sits between data sources and security tools. It ingests telemetry from endpoints, cloud services, network devices, and applications. It then processes, enriches, and routes that data to SIEMs, data lakes, automation platforms, and AI-powered analysis systems.

This positioning enables a fundamental architectural principle: decoupling sources from destinations. Data sources don't need to know about every tool that might consume their data. Security tools don't need native integrations with every possible data source. The SDPP handles translation and routing, making the architecture more flexible and maintainable.
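The decoupling principle can be illustrated with a small sketch: sources only call `ingest`, destinations only implement `send`, and a routing function in the middle decides who gets what. All names here (`Pipeline`, `MemoryDestination`, the router lambda) are hypothetical stand-ins for real connectors.

```python
from typing import Callable, Dict, List, Protocol


class Destination(Protocol):
    name: str

    def send(self, event: dict) -> None: ...


class MemoryDestination:
    """Hypothetical stand-in for a SIEM, data lake, or SOAR connector."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.received: List[dict] = []

    def send(self, event: dict) -> None:
        self.received.append(event)


class Pipeline:
    def __init__(self, router: Callable[[dict], List[str]]) -> None:
        self.router = router
        self.destinations: Dict[str, Destination] = {}

    def register(self, destination: Destination) -> None:
        # New consumers plug in here without any change to sources.
        self.destinations[destination.name] = destination

    def ingest(self, event: dict) -> None:
        # Sources call only this method; they never know which tools consume the event.
        for name in self.router(event):
            if name in self.destinations:  # unregistered names are skipped in this sketch
                self.destinations[name].send(event)
```

Adding a new tool means registering one more destination; no source has to change.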

SDPP vs. SIEM: Complement, Not Replacement

SDPPs don't replace SIEMs. They complement them by serving different purposes in the security architecture.

An SDPP acts as the data control plane, handling the complexities of ingestion, transformation, and routing before data reaches analysis tools. The SIEM remains the analytics and investigation UI where analysts search, correlate, and investigate security events.

Separating these responsibilities improves flexibility. Organizations can route some data to a SIEM for active detection, other data to a data lake for long-term retention, and still other data to specialized tools for compliance or threat hunting. The SDPP makes this orchestration possible without duplicating ingestion or losing control of the data flow.

When Organizations Typically Need an SDPP

Several signals indicate that an organization would benefit from implementing an SDPP:

SIEM costs are outpacing the value received. When ingestion bills grow faster than detection quality or team capabilities, it's time to reconsider the architecture. An SDPP can route data more intelligently, sending only high-value telemetry to expensive SIEM storage.

Tool sprawl creates duplicated ingestion. If multiple security tools are independently ingesting the same data sources, you're paying multiple times for the same telemetry. An SDPP centralizes ingestion and routes to multiple destinations from a single source.

Detection teams are blocked by ingestion and query constraints. When analysts can't onboard new data sources quickly, or when detection rules take too long to execute, pipeline-level processing can break these bottlenecks.

These patterns appear across enterprises, managed security service providers (MSSPs), and information sharing and analysis centers (ISACs). Any organization dealing with significant security telemetry volumes faces similar challenges.

Common Misconceptions About Security Data Pipeline Platforms

"Isn't this just log management?"

Log management focuses on collection and storage. SDPPs go further, providing real-time transformation, enrichment, routing, and the ability to run detection logic on data in flight. While log management is one capability, it's not the defining characteristic.

"Does this replace my SIEM?"

No. SDPPs complement SIEMs by handling data operations before information reaches the SIEM. Many organizations use SDPPs to make their existing SIEM more effective by sending it cleaner, more relevant data. The SIEM remains valuable for investigation, correlation, and analyst workflows.

"Is this more complexity?"

At first, adding another platform component can look like added complexity. In practice, SDPPs tend to reduce complexity by consolidating disparate ingestion methods, eliminating duplicate data flows, and providing a single place to manage transformation and routing logic. Once the pipeline is in place, the operational burden typically decreases.

SDPP+: How Abstract Uses the Pipeline as a Foundation for a Broader Security Platform

An SDPP provides essential foundation capabilities: scale, control, and flexibility over security telemetry. But on its own, a pipeline doesn't make investigations easier, simplify analyst workflows, or reduce operational complexity. It solves data movement and basic processing, but security operations require more than efficient plumbing.

Platforms built on an SDPP foundation (like Abstract!) can extend these capabilities meaningfully.

They can:

- treat detections, investigations, and automation as connected objects rather than isolated workflows

- support multiple team workflows without forcing everyone into a single tool or UI

- enable architectures that lower SIEM spend without reducing security visibility

Abstract is built on an SDPP foundation, but it intentionally goes beyond a strict SDPP definition. In addition to ingesting, shaping, and routing security data, Abstract can also apply analytics and detections directly in the pipeline, without requiring data to be committed to a single downstream system.

Moving Detections Closer to the Data

In traditional security architectures, detections happen after data is indexed in a SIEM. An event arrives, gets stored, and then detection rules query the stored data to identify threats. This process introduces latency between when an event occurs and when it triggers an alert.

A streaming detection model inverts this approach. Detection logic runs as data flows through the pipeline, before indexing or storage. Rules evaluate events in real time as they pass through and trigger alerts as soon as conditions match.
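A streaming rule keeps a small amount of state per entity and evaluates each event as it arrives. The sketch below is a hypothetical “failed-login burst” rule that alerts when one user accumulates `threshold` failures within a sliding window. It assumes events arrive roughly in timestamp order and use normalized field names such as `user.name` and `event.outcome`; real streaming engines also have to handle late and out-of-order events.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class FailedLoginBurst:
    """Hypothetical rule: alert when one user fails `threshold` logins within `window_s` seconds."""

    def __init__(self, threshold: int = 5, window_s: float = 60.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        # Per-user timestamps of recent failures; this is the rule's only state.
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)

    def evaluate(self, event: dict) -> Optional[dict]:
        if event.get("event.action") != "login" or event.get("event.outcome") != "failure":
            return None
        user, ts = event["user.name"], event["timestamp"]
        recent = self.failures[user]
        recent.append(ts)
        # Evict failures that have aged out of the sliding window.
        while recent and ts - recent[0] > self.window_s:
            recent.popleft()
        if len(recent) >= self.threshold:
            recent.clear()  # reset so a single burst produces a single alert
            return {"rule": "failed_login_burst", "user.name": user, "timestamp": ts}
        return None
```

The alert is emitted on the event that crosses the threshold, with no indexing step in between, which is where the latency advantage comes from.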

This architectural shift has significant implications. Detection no longer has to wait for indexing, so mean time to detect (MTTD) can drop from minutes to seconds. And because detections run in the pipeline rather than inside a specific SIEM, they become portable across tools.
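One way to get that portability is to express rules as plain data over normalized field names and evaluate them with a small engine, rather than writing them in a SIEM's query language. The rule format below is a hypothetical sketch, similar in spirit to community formats such as Sigma but not compatible with them; the field names are assumptions.

```python
from typing import Any, Callable, Dict

# Hypothetical rule expressed as data over normalized field names, not a SIEM query.
RULE = {
    "id": "outbound-to-known-threat",
    "all": [
        {"field": "destination.threat", "op": "exists"},
        {"field": "destination.port", "op": "in", "value": ["80", "443"]},
    ],
}

# The small set of operators this sketch understands.
OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "exists": lambda actual, _expected: actual is not None,
    "equals": lambda actual, expected: actual == expected,
    "in": lambda actual, expected: actual in expected,
}


def matches(rule: dict, event: dict) -> bool:
    """True when every condition in the rule's `all` list holds for the event."""
    return all(
        OPS[cond["op"]](event.get(cond["field"]), cond.get("value"))
        for cond in rule["all"]
    )
```

Because the rule is just data, the same definition could be evaluated in the pipeline or translated into a particular tool's query language; that translation layer is not shown here.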

Summary: Why SDPPs Are Becoming Foundational

Security Data Pipeline Platforms emerged to solve the scale and cost problems that broke SIEM-centric architectures. They've proven their value in controlling data volumes and reducing ingestion expenses.

But their importance extends beyond cost savings. SDPPs enable earlier detection (and therefore lower mean time to detect) and reduce vendor lock-in. They give security teams the architectural flexibility to build operations around their needs rather than their tools' limitations.

FAQ

What's the difference between an SDPP and a SIEM?

An SDPP handles data ingestion, transformation, and routing, while a SIEM provides analytics, investigation, and detection capabilities. They work together, with the SDPP preparing and delivering data to the SIEM.

Can I use an SDPP with my existing security tools?

Yes. SDPPs are designed to integrate with existing security infrastructure, including SIEMs, data lakes, SOAR platforms, and threat intelligence tools.

How does an SDPP reduce costs?

SDPPs reduce costs by filtering unnecessary data, routing high-volume logs to cheaper storage, eliminating duplicate ingestion across tools, and enabling more efficient use of expensive SIEM licenses.

Do I need specialized skills to operate an SDPP?

While SDPPs require some technical expertise, they're generally easier to manage than coordinating multiple point solutions. Most platforms provide interfaces for defining routing rules and transformations without extensive programming knowledge.

How long does SDPP implementation take?

Implementation timelines vary by organization size and complexity. Initial deployment for cost control and basic routing might take weeks. Building out comprehensive detection capabilities and full integration across tools typically spans months.

Glossary

SIEM (Security Information and Event Management): A platform that aggregates, stores, and analyzes security event data to enable threat detection and investigation.

Telemetry: Security-relevant data generated by systems, applications, and infrastructure, including logs, events, metrics, and traces.

Mean Time to Detect (MTTD): The average time between when a security incident occurs and when it's detected by security systems or personnel.

Enrichment: The process of adding context to security events, such as threat intelligence, geolocation data, or asset information, to make them more actionable.

Fan-out: The ability to send a single data stream to multiple destinations simultaneously, enabling different tools to consume the same data.

Lock-in: A situation where an organization becomes dependent on a specific vendor's technology, making it difficult or expensive to switch to alternatives.

ABSTRACTED

We would love for you to be part of the journey. Let’s grab a coffee, have a chat, and set up a demo!

Your friends at Abstract AKA one of the most fun teams in cyber ;)
