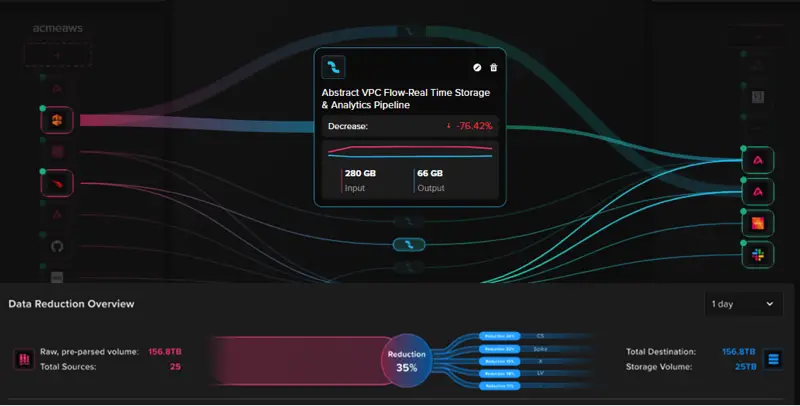

Data Reduction & Management

Filter Events Before They Hit Your SIEM or Data Lake

Abstract processes data in-stream — dropping noise, deduplicating, and aggregating redundant events before they reach any destination. Filtering can happen even earlier, at the source itself, before data is ever transmitted.
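As a rough illustration of the three reduction steps named above, here is a minimal, generic sketch in Python. It is not Abstract's API. The event shape, the `NOISY_EVENT_TYPES` set, and the stage functions are hypothetical. Filtering and deduplication run as streaming generators. Aggregation here consumes a finite batch, standing in for one time window.

```python
from collections import Counter
from typing import Iterable, Iterator

# Assumption: which event types count as "noise" is illustrative; a real
# pipeline would configure this per source.
NOISY_EVENT_TYPES = {"heartbeat", "debug"}


def drop_noise(events: Iterable[dict]) -> Iterator[dict]:
    """Filter stage: skip event types that carry no detection value."""
    for event in events:
        if event.get("event_type") not in NOISY_EVENT_TYPES:
            yield event


def deduplicate(events: Iterable[dict]) -> Iterator[dict]:
    """Dedup stage: forward only the first copy of field-for-field identical events."""
    seen: set[tuple] = set()  # unbounded here; a production stage would expire old entries
    for event in events:
        fingerprint = tuple(sorted(event.items()))  # assumes hashable field values
        if fingerprint not in seen:
            seen.add(fingerprint)
            yield event


def aggregate(events: Iterable[dict], keys: tuple[str, ...]) -> list[dict]:
    """Aggregation stage: collapse a finite batch into one counted record per key."""
    counts = Counter(tuple(event.get(k) for k in keys) for event in events)
    return [dict(zip(keys, key), count=n) for key, n in counts.items()]


raw = [
    {"source": "fw01", "event_type": "deny", "src_ip": "10.0.0.5"},
    {"source": "fw01", "event_type": "deny", "src_ip": "10.0.0.5"},  # exact duplicate
    {"source": "fw01", "event_type": "heartbeat", "src_ip": "10.0.0.1"},  # noise
    {"source": "fw01", "event_type": "deny", "src_ip": "10.0.0.9"},
]
summary = aggregate(deduplicate(drop_noise(raw)), keys=("source", "event_type"))
print(summary)  # [{'source': 'fw01', 'event_type': 'deny', 'count': 2}]
```

Four input events become a single summary record. The noise stage drops the heartbeat, dedup removes the retransmitted deny, and aggregation folds the remaining two denies into one counted row.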

Run Detections on High-Volume Sources Without Paying to Store Them

Get the signal without the high storage bill. Abstract can run streaming detections on very high-volume sources such as VPC flow logs, generating fully enriched, correlated alerts that route to your SIEM for further analysis, while the raw telemetry goes straight to more cost-effective storage.
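The split described above can be sketched as a fan-out. Every raw record goes to cheap storage, and only enriched alerts reach the SIEM. This is a hypothetical sketch, not Abstract's detection engine. The `Flow` fields loosely echo VPC flow log columns. The port-scan rule, the threshold, the `ASSET_OWNERS` enrichment table, and the in-memory lists standing in for destinations are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """Hypothetical subset of VPC flow log fields."""
    src_addr: str
    dst_addr: str
    dst_port: int
    action: str  # "ACCEPT" or "REJECT"


# Hypothetical enrichment table standing in for an asset inventory lookup.
ASSET_OWNERS = {"10.0.1.23": "build-server"}
PORT_SCAN_THRESHOLD = 3  # illustrative; real thresholds are tuned per environment


class FlowRouter:
    def __init__(self) -> None:
        self.siem: list[dict] = []      # stands in for the SIEM destination (alerts only)
        self.archive: list[Flow] = []   # stands in for low-cost object storage (everything)
        self._rejected_ports: defaultdict[str, set[int]] = defaultdict(set)
        self._alerted: set[str] = set()

    def handle(self, flow: Flow) -> None:
        # Every raw record is archived, whether or not it contributes to an alert.
        self.archive.append(flow)
        if flow.action != "REJECT":
            return
        # Stateful detection: track distinct rejected destination ports per source.
        ports = self._rejected_ports[flow.src_addr]
        ports.add(flow.dst_port)
        if len(ports) >= PORT_SCAN_THRESHOLD and flow.src_addr not in self._alerted:
            self._alerted.add(flow.src_addr)
            # Only the enriched, correlated alert is forwarded to the SIEM.
            self.siem.append({
                "rule": "possible_port_scan",
                "src_addr": flow.src_addr,
                "distinct_ports": sorted(ports),
                "asset_owner": ASSET_OWNERS.get(flow.src_addr, "unknown"),
            })


router = FlowRouter()
for port in (22, 23, 3389, 3389):
    router.handle(Flow("10.0.1.23", "10.0.2.8", port, "REJECT"))
router.handle(Flow("10.0.1.50", "10.0.2.8", 443, "ACCEPT"))
print(len(router.archive), len(router.siem))  # 5 1
```

The SIEM receives one alert that summarizes four rejected flows. All five raw records still land in the archive for later hunting.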

Identify Which Data Sources Are Burning Your Budget

Get per-field visibility into which event types and sources consume the most volume. Make informed decisions about what to reduce — before committing to a routing change.
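One simple way to approximate this kind of breakdown is to total serialized bytes per (source, event type) and per field name. The sketch below is a hypothetical illustration, not how Abstract measures volume. It assumes that JSON-encoded size is a reasonable proxy for what a destination ingests and bills.

```python
import json
from collections import Counter


def volume_breakdown(events):
    """Return approximate byte totals per (source, event_type) and per field name."""
    by_type: Counter = Counter()
    by_field: Counter = Counter()
    for event in events:
        # Assumption: JSON-serialized size approximates what a destination ingests.
        by_type[(event["source"], event["event_type"])] += len(json.dumps(event).encode("utf-8"))
        for name, value in event.items():
            by_field[name] += len(json.dumps(value).encode("utf-8"))
    return by_type, by_field


events = [
    {"source": "vpc_flow", "event_type": "flow", "raw": "a" * 300},
    {"source": "vpc_flow", "event_type": "flow", "raw": "b" * 300},
    {"source": "okta", "event_type": "login", "user": "alice"},
]
by_type, by_field = volume_breakdown(events)
print(by_type.most_common(1))   # [(('vpc_flow', 'flow'), 710)]
print(by_field.most_common(1))  # [('raw', 604)]
```

The per-field view shows that the bulky `raw` payload drives most of the volume. That is the kind of evidence you would want before deciding to trim or reroute a field.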

Intelligent Cold Storage

Store Years of Telemetry Without Rehydration Fees

LakeVilla is Abstract's cloud-native cold storage layer built on AWS S3, Azure Blob, or Google Cloud Storage. Query archived data instantly with no rehydration steps and no retrieval charges.

Replay Archived Logs Through Live Detection Workflows

Cold data doesn't have to stay cold. Send an archived dataset back through Abstract's detection engine for retroactive threat hunting, rule tuning, or validating new detections against historical events.

Normalize and Enrich Before Archiving — Not After

Data is pre-processed by Abstract's pipeline before it reaches a data lake: aggregated, normalized, schema-aligned, and threat-enriched. When you need it, it's already query-ready. No data swamp.
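To show what normalizing and enriching at write time can look like, here is a small, hypothetical sketch. Two firewall vendors with different field names are mapped onto one common schema. Timestamps are aligned to ISO 8601 UTC, and a threat-intel flag is attached before each record is serialized for the archive. The vendor names, field mappings, and `KNOWN_BAD_IPS` set are illustrative assumptions, and both sources are assumed to emit epoch-second timestamps.

```python
import json
from datetime import datetime, timezone

# Hypothetical per-source field mappings onto one common schema.
FIELD_MAPS = {
    "fw_vendor_a": {"srcip": "src_ip", "dstip": "dst_ip", "ts": "time"},
    "fw_vendor_b": {"source_address": "src_ip", "destination_address": "dst_ip", "epoch": "time"},
}

# Hypothetical threat-intel set; in practice this would come from a feed.
KNOWN_BAD_IPS = {"203.0.113.7"}


def normalize(source: str, record: dict) -> dict:
    """Rename vendor fields, align the timestamp, and enrich before archiving."""
    out = {"source": source}
    for raw_name, common_name in FIELD_MAPS[source].items():
        if raw_name in record:
            out[common_name] = record[raw_name]
    # Assumes epoch seconds; convert to ISO 8601 UTC so archived data filters uniformly.
    out["time"] = datetime.fromtimestamp(out["time"], tz=timezone.utc).isoformat()
    # Enrich once at write time so later queries need no join against intel data.
    out["dst_ip_known_bad"] = out.get("dst_ip") in KNOWN_BAD_IPS
    return out


a = normalize("fw_vendor_a", {"srcip": "10.0.0.5", "dstip": "203.0.113.7", "ts": 0})
b = normalize("fw_vendor_b", {"source_address": "10.0.0.6",
                              "destination_address": "198.51.100.2", "epoch": 0})
archive_lines = [json.dumps(r, sort_keys=True) for r in (a, b)]  # e.g. JSON Lines in object storage
print(archive_lines[0])
```

Both records now share the same field names and timestamp format, so a later query over the archive can treat them as one dataset.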
