Shrinking the Stack of Needles

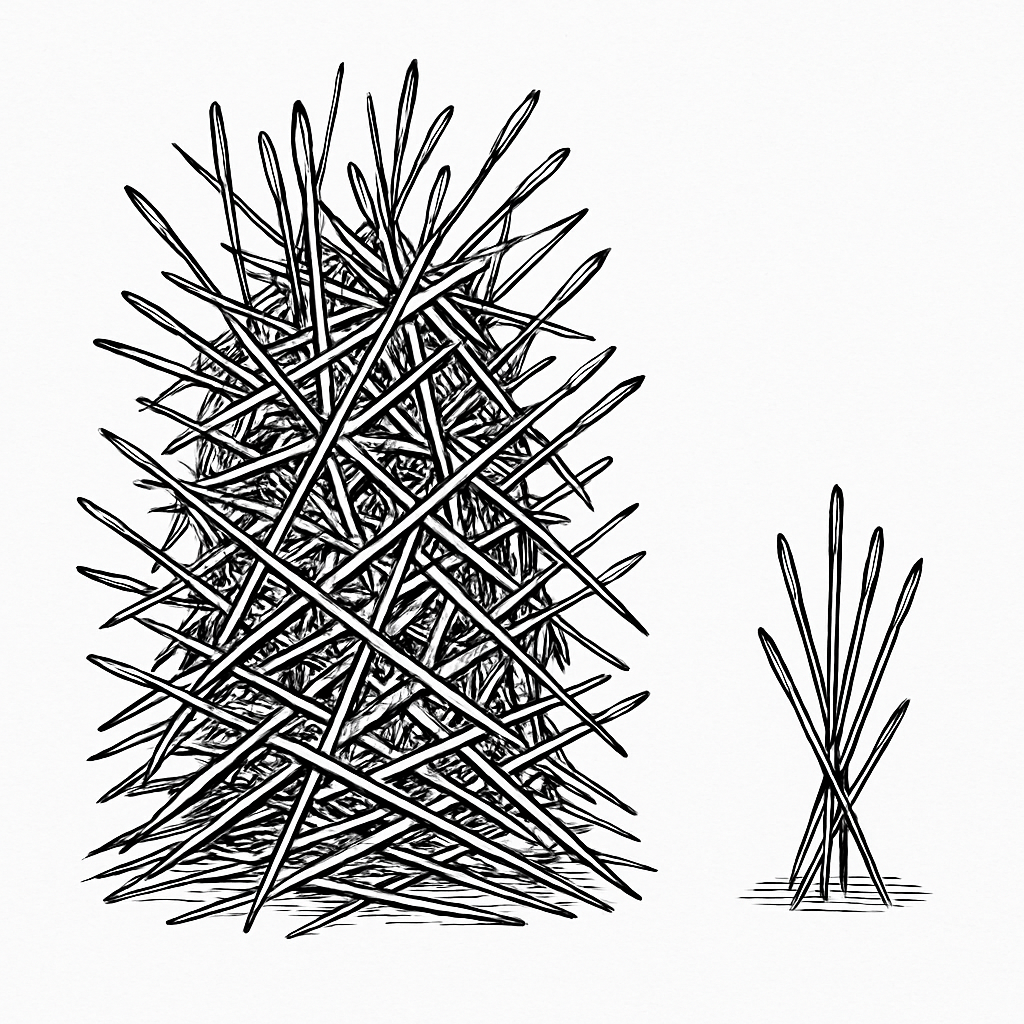

The "Needle in a Stack of Needles" Problem

There’s an expression I’ve heard for as long as I’ve been in cybersecurity: we’re trying to find the needle inside a stack of needles. It’s an evolution of the old “needle in a haystack” line, but cyber folks have always had a way of adapting metaphors to match a grimmer reality.

If you’re a security analyst, you live this every day. You’re constantly looking for better ways to detect threats, reduce your mean time to detection, and sharpen your response. Everything pushes you toward getting as close to “boom” (the moment something bad actually happens) as you possibly can. And because of that pressure, we’re always searching for new techniques, new tools, new workflows that will help us sort through this overwhelming volume more effectively.

But one thing we don’t spend enough time talking about is the size of the stack itself. We never stop to ask: Why is the stack of needles so big? And why do we continually accept that the only solution is simply to get better at sorting through it?

The Explosive Growth of Telemetry

The volume of telemetry organizations collect today is drastically larger than when I started my career. Back in the early 2000s, a large SIEM deployment handled around 50,000 events per second, maybe five terabytes a day. Now, medium-sized environments operate at that scale, and the largest ones push a million events per second, which works out to roughly 100 terabytes a day. The growth hasn’t been linear. It’s been explosive.
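To make those numbers concrete, here is a rough back-of-the-envelope sketch of the arithmetic. The average event size is my assumption, not a measured figure: roughly 1,150 bytes per event is what makes 50,000 events per second land near five terabytes a day, and real event sizes vary widely by source.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Assumption: average stored size per event, picked so that 50,000 EPS
# comes out near "five terabytes a day". Real sizes vary by log source.
ASSUMED_AVG_EVENT_BYTES = 1_150


def daily_volume_tb(events_per_second: float,
                    avg_event_bytes: float = ASSUMED_AVG_EVENT_BYTES) -> float:
    """Approximate daily volume in decimal terabytes (1 TB = 10**12 bytes)."""
    return events_per_second * SECONDS_PER_DAY * avg_event_bytes / 1e12


if __name__ == "__main__":
    for label, eps in [("early-2000s large SIEM", 50_000),
                       ("largest environments today", 1_000_000)]:
        print(f"{label}: {eps:,} EPS ~ {daily_volume_tb(eps):.1f} TB/day")
```

Under that assumed event size, the two scenarios come out near 5 and just under 100 terabytes a day, a twentyfold jump in what has to be stored and searched.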

Despite that growth, we rarely step back and ask if we need all this data in real-time analytic storage. When I ask people why they collect everything, the answers are predictable: compliance, regulations, visibility goals. I’ve asked CISOs to describe their top priorities for the year, and many say something like, “We’re going to collect data from all our cloud systems now.” They think they’re gaining more visibility, but decisions like that guarantee the stack gets even bigger.

Mapping Detection to Data Collection

The harder question, the one we tend to avoid, is what are we actually doing with all of this data? Some of it is clearly useful for detection. But a huge amount of what we collect isn’t ever going to trigger a rule, feed a model, or help an analyst. If your detections focus on authentication patterns and privilege escalation, you probably don’t need system-level performance metrics in your real-time analytics pipeline. That kind of data could just as easily live in low-cost object storage, ready for long-term retention, compliance reporting, or forensic needs, without bloating the systems analysts depend on day to day.

To get there, we need to think source by source. What events come from each system? Which ones matter for detection? Which ones have nothing to do with any scenario we’re trying to identify? Consider Windows event logs: if your rules focus on authentication activity or privilege escalation, why ingest system-level events about memory or CPU usage? Those events often make up the bulk of the data volume, but they rarely, if ever, contribute to detections.

If we could map our detection scenarios to our collection strategy, we’d quickly see that only a fraction of the data actually needs to flow into the high-performance “stack of needles.” The rest could be routed somewhere cheaper and quieter, where it still has value but doesn’t overwhelm analysts or slow down searches.
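As a minimal sketch of what that mapping could look like, the snippet below lists a couple of hypothetical detection scenarios, collects the Windows Security event IDs they depend on, and routes each incoming event either to the analytics tier or to cheap archive storage. The scenario names, the `route` function, the destination labels, and the 9001/9002 performance event IDs are all made up for illustration. The 4624, 4625, 4672, and 4728 IDs are real Windows Security events, but which ones your own rules need is something only your detection content can tell you.

```python
from collections import Counter

# Hypothetical detection scenarios mapped to the Windows Security event IDs
# they rely on. The IDs are real; the mapping itself is illustrative only.
DETECTION_REQUIREMENTS = {
    # 4624: successful logon, 4625: failed logon
    "brute_force_logon": {4624, 4625},
    # 4672: special privileges assigned to new logon
    # 4728: member added to a security-enabled global group
    "privilege_escalation": {4672, 4728},
}

# Union of every event ID that at least one detection needs.
NEEDED_EVENT_IDS = set().union(*DETECTION_REQUIREMENTS.values())


def route(event: dict) -> str:
    """Return 'analytics' if some detection uses this event, else 'archive'."""
    return "analytics" if event.get("event_id") in NEEDED_EVENT_IDS else "archive"


if __name__ == "__main__":
    sample = [
        {"event_id": 4625, "source": "Security"},
        {"event_id": 4672, "source": "Security"},
        {"event_id": 9001, "source": "perf-metrics"},  # made-up CPU sample
        {"event_id": 9002, "source": "perf-metrics"},  # made-up memory sample
    ]
    print(Counter(route(e) for e in sample))
```

Nothing is dropped in this sketch. Events that no detection uses still go to the archive destination, so they stay available for retention, compliance reporting, or forensics.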

Beyond the Log Lemming Effect

And that’s really the point. How long does it take to find something when you’re searching across hundreds of terabytes? How fast can an investigation move when every search touches everything? If you reduce the size of the stack, you improve speed, reduce cost, and give analysts a dramatically better experience.

I call the opposite behavior the log lemming effect: the instinctive belief that we have to collect everything, and that every log must be held in the most expensive storage tier because something might matter someday. But the better question is: Do you need it for detection right now, or do you simply need to have it available somewhere?

This is the mindset shift we work on every day at Abstract. Security data pipelines combined with streaming detection let you reduce the volume of data that lands in your analytic stack without sacrificing visibility. In fact, you improve it, because you’re no longer drowning in needles.

The needle-in-a-stack-of-needles problem isn’t going away on its own. But we do have the ability to make the stack smaller. That alone can make all the difference. Before adding a new data source, ask whether it will actually power a detection, or just make the stack of needles bigger.

ABSTRACTED

We would love for you to be a part of the journey. Let’s grab a coffee, have a chat, and set up a demo!

Your friends at Abstract, AKA one of the most fun teams in cyber ;)
